At TechChange, we pride ourselves on teaching our participants the crucial skills needed for a career in social good. And how do we find out what those skills are? We go straight to the source! In our new M&E Professionals series, we’ll be talking one-on-one with the pros who are recruiting for these very positions.

2015 was declared the International Year of Evaluation, so it’s no wonder that M&E is increasingly becoming a sought-after skill in many organizations today. We spoke with Michael Klein to learn more about the ideal skills an M&E professional should have and how to get them:

Michael Klein is the director of International Solutions Group (ISG), a company that works with governments, U.N. agencies, international organizations, NGOs and other companies to improve the implementation of humanitarian aid and development programs.

Mike’s work sits at what he describes as the intersection of ‘old-school’ M&E and ‘new-school’ ICT. He works with partners to build on established M&E strategies, streamline data flows and analysis systems, and facilitate fast, reliable, and secure access to key information, knowledge, reporting, and data.

Q: It’s an exciting time to be an M&E professional. Do you see a big need for young professionals who are trained to take on this kind of work?

M: Yes, it is definitely an exciting time to be in my field. Just looking at the types of conferences and forums being held on the subject, it’s really clear that M&E is a rapidly developing focus in our field. Specifically, I see M&E growing in two separate, but overlapping, areas.

Standard M&E careers: If you were to search the job opportunities listed on Devex or Idealist, these are the typical M&E positions you would find: ones that take a traditional approach (i.e., M&E personnel provide managers with the analysis and data they request). These opportunities are certainly growing, as organizations will always need highly trained staff to address their M&E needs.

Beyond the standard label of M&E: Just as in other sectors, skill sets such as analytics, knowledge management, data collection, and information sharing are highly valued in M&E, and the field is increasingly embracing individuals who have these skill sets. This is especially true for people who understand analytics and how data can be collected, used, and analyzed.

As more and more players in the field use new technologies and tools for data collection and analysis, a college graduate or young professional entering M&E has a great opportunity to make a mark on the industry by leveraging digital knowledge to provide guidance to some of the most prominent development organizations.

Q: What do you look for when hiring?

M: First off, I think it’s important to have a passion, an area of expertise that you enjoy. As a profession, M&E can take you in myriad directions, and it is important to identify the type of work you most want to engage in. A strength of my team at ISG is that everyone has differing professional interests, ranging from gender equality to how ICTs can catalyze development. Having this range of specialties strengthens what we can offer clients, and when looking at new hires, I look for individuals who are already established or are on their way to becoming an expert in a field or sector.

Aside from passion and subject-matter expertise, people need to appreciate the big picture. When we hire, we want an individual who understands what organizational performance means, ranging from back-office activities such as business development and marketing all the way through to front-line programming at the field level. If you’re interested in M&E, you have to understand that organizational effectiveness is made possible by a complex interplay of many elements. Having that general appreciation for how organizations function, the types of struggles they face, and how you can improve their performance is key to success in this field.

Mike with his group at ISG

Q: What does career progression look like for someone in M&E?

M: The reason I was drawn to this field is that there is no set path. Before working in M&E, I worked in Mergers & Acquisitions (M&A), which attracted me for similar reasons. When I worked in M&A, my clients represented a wide range of sectors, and I worked with management to help these organizations restructure, fundraise, find new investors, and generally position themselves to be more successful at what they were already doing (sound familiar?). Monitoring and evaluation is no different. People come to the field from many backgrounds: corporate finance (like me), IT, agriculture, academic programs focused on M&E, and plenty of others. And they serve clients representing the same diversity.

Because everyone enters the field with different skills, it is hard to say exactly how one progresses in it. Someone entering the field after studying M&E at a university will likely have a fairly technical background and may take an M&E support role within a larger organization, assuming more responsibility over the years. By contrast, if you’re transitioning into the field with an already established skill set from a different sector, you’re likely to take a different direction and provide either consultancy services or specific project-level guidance related to your expertise.

Q: What role do you think technology plays in M&E?

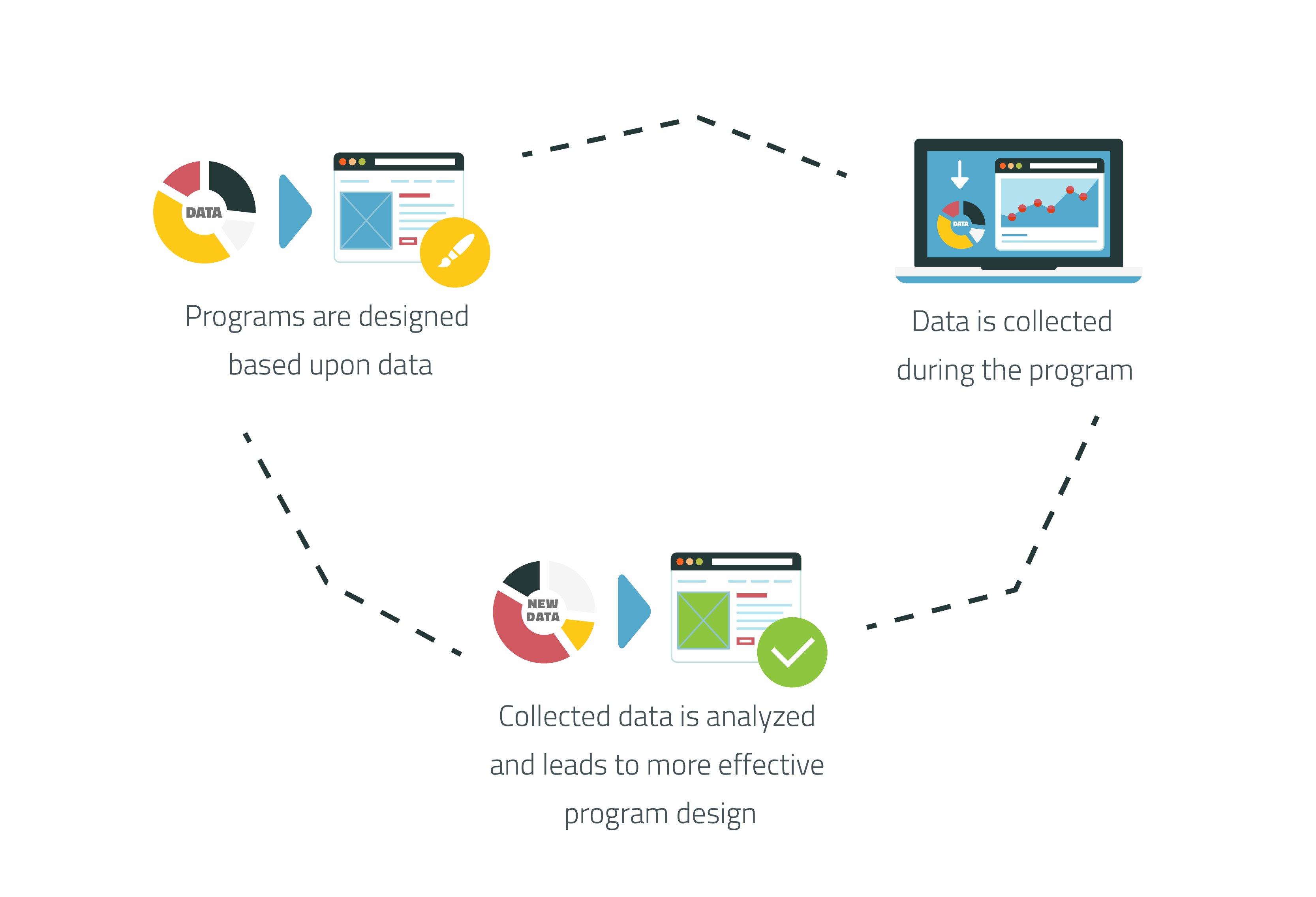

M: My personal take is that technology in and of itself is not necessarily transformative. Whether M&E is done with pen and paper or with state-of-the-art IT solutions, good M&E is good M&E. However, technology has allowed organizations to quickly come up to speed with regard to implementing more robust approaches to M&E. If an organization is just shaping its M&E approach, using a technology solution can offer ease and expediency. Most of these tools are built on industry best practices, so their structured, hierarchical approach reflects what we think of as good M&E. Clients who use these tools are then trained to capture their data systematically, with the end in mind.

A lot of our clients initially say, “I really like that heat map,” or cite another specific visualization provided by an M&E tool and ask how they can create it for their program. This then launches into a discussion about how to collect data in a way that can deliver these types of reporting and highlight what is most important. It is much harder to go the other way.

Q: Any other pieces of advice you have for people considering the TechChange diploma in Tech for M&E?

M: Know how your study applies to what you want to accomplish and set some goals for yourself before you get into the nuts and bolts of the diploma program. If you come to these courses with a general idea of what skill gaps you want to address, you’re going to be very well placed to make the best use of the TechChange diploma program.

When I was taking one of TechChange’s M&E courses, I knew that I wanted to leverage the new skills I was developing to enhance my company’s marketing efforts. Thus, when I studied data visualization, I created multiple infographics for ISG, using tools that I would not have come across on my own. This was one of the best parts of the class: discovering cutting-edge tools in the field that I could utilize immediately.

———————————

That’s all for this installment in our M&E Professionals Series! Be sure to check out our Technology for Monitoring and Evaluation diploma program – deadline to enroll is September 4!